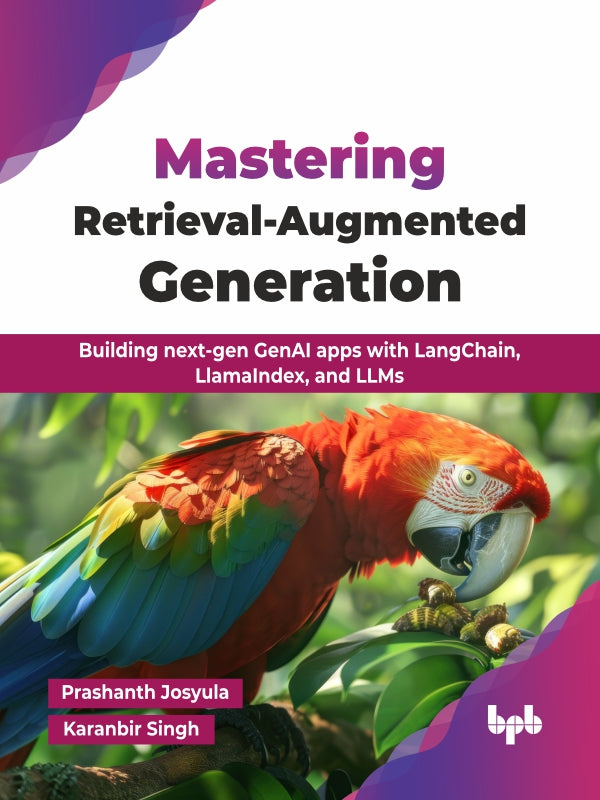

Mastering Retrieval-Augmented Generation

ISBN: 9789365897241

eISBN: 9789365896688

Authors: Prashanth Josyula, Karanbir Singh

Rights: Worldwide

Edition: 2025

Pages: 394

Dimensions: 7.5 × 9.25 inches

Book Type: Paperback

DESCRIPTION

Large language models (LLMs) like GPT, BERT, and T5 are revolutionizing how we interact with technology — powering virtual assistants, content generation, and data analysis. As their influence grows, understanding their architecture, capabilities, and ethical considerations is more important than ever. This book breaks down the essentials of LLMs and explores retrieval-augmented generation (RAG), a powerful approach that combines retrieval systems with generative AI for smarter, faster, and more reliable results.

It provides a step-by-step approach to building advanced intelligent systems that use RAG to make their output factually correct, context-aware, and sustainable. You will start with foundational knowledge (architectures, training processes, and ethical considerations) before diving into the mechanics of RAG and learning how retrievers and generators collaborate to improve performance. The book introduces essential frameworks like LangChain and LlamaIndex, walking you through practical implementations, troubleshooting, and optimization techniques, and offers hands-on coding exercises to ensure practical understanding. Real-world case studies and industry applications help bridge the gap between theory and implementation.

By the final chapter, you will have the skills to design, build, and optimize RAG-powered applications — integrating LLMs with retrieval systems, creating custom pipelines, and scaling for performance. Whether you are an experienced AI professional or an aspiring developer, this book equips you with the knowledge and tools to stay ahead in the ever-evolving world of AI.

WHAT YOU WILL LEARN

● Understand the fundamentals of LLMs.

● Explore RAG and its key components.

● Build GenAI applications using LangChain and LlamaIndex frameworks.

● Optimize retrieval strategies for accurate and grounded AI responses.

● Deploy scalable, production-ready RAG pipelines with best practices.

● Troubleshoot and fine-tune RAG pipelines for optimal performance.

WHO THIS BOOK IS FOR

This book is for AI practitioners, data scientists, students, and developers looking to implement RAG using LangChain and LlamaIndex. Readers with a basic knowledge of Python, ML concepts, and NLP fundamentals will be able to use what they learn to accelerate their careers.

TABLE OF CONTENTS

1. Introduction to Large Language Models

2. Introduction to Retrieval-augmented Generation

3. Getting Started with LangChain

4. Fundamentals of Retrieval-augmented Generation

5. Integrating RAG with LangChain

6. Comprehensive Guide to LangChain

7. Introduction to LlamaIndex

8. Building and Optimizing RAG Pipelines with LlamaIndex

9. Advanced Techniques with LlamaIndex

10. Deploying RAG Models in Production

11. Future Trends and Innovations in RAG

ABOUT THE AUTHORS

Prashanth Josyula is currently a Principal Member of Technical Staff (PMTS) at Salesforce AI Cloud, where he uses his over 16 years of industry experience to turn ideas into impactful realities. His career, which began in 2008, has been a dynamic exploration of various technological landscapes based on continuous learning, experimentation, and leadership principles.

He formerly worked at IBM, Bank of America, ADP, and Optum, where he gained extensive experience across a variety of disciplines. He is a seasoned polyglot programmer, fluent in several programming languages, including Java, Python, Scala, Kotlin, JavaScript, TypeScript, Shell Scripting, and SQL. Beyond languages, he has a deep understanding of evolving technologies and has contributed to a large ecosystem of open-source solutions, which enables him to architect resilient systems, design user-friendly interfaces, and extract insights from massive datasets.

He has engineered scalable, resilient backend systems using Java/JavaEE, Python, Scala, and Spring, and his expertise in UI technologies such as ExtJS, JQuery, DOJO, Angular, and React has allowed him to create visually appealing, user-friendly interfaces. His proficiency in Big Data technologies such as Hadoop, Spark, Hive, Oozie, and Pig has allowed him to identify patterns and convert raw data into strategic insights. Furthermore, his extensive involvement in microservices and infrastructure via Kubernetes, Helm, Terraform, and Spinnaker has refined his technical skills and resulted in substantial open-source contributions.

AI and ML remain at the heart of his current endeavors as he continues to push the boundaries of intelligent systems, constantly attempting to innovate and redefine what is possible. With a career distinguished by constant change, Prashanth feels that every obstacle presents an opportunity for innovative discovery, motivating him to advance technology and leave a lasting influence. His quest is driven by relentless curiosity and a desire for innovation, and he finds joy in creating not just code but sophisticated, cutting-edge solutions.

Karanbir Singh is an accomplished engineering leader with almost a decade of experience leading AI/ML engineering and distributed systems. He is a senior software engineer at Salesforce and an active contributor to the AI and software engineering community, frequently speaking at industry-leading conferences such as GDG DevFests and AIM 2025. His participation as a reviewer for top-tier AI conferences such as AAAI 2025, ICLR 2025, CHIL 2025, and IJCNN 2025, and as an author at the Web Conference (WWW ’25), IEEE PDGC-2024, and ICISS-2025, is a testament to his expertise and thought leadership in cutting-edge technologies such as retrieval-augmented generation (RAG), AI agents, responsible AI, and time series analysis.

He was also featured on the Data Neighbors Podcast on YouTube, where he shared his key insights on the future of AI-driven decision-making and retrieval systems. The podcast has hosted several notable AI experts, including Josh Starmer (StatQuest), highlighting the platform’s industry recognition.

At TrueML, as an engineering manager, he led a critical team responsible for developing and deploying ML models in production. His leadership directly contributed to increased revenue, client retention, and substantial cost savings through innovative solutions. His role involved not only steering technical projects but also shaping the company’s roadmap in partnership with data science, product management, and platform teams. At Lucid Motors and Poynt, he developed critical components and integrations that advanced product capabilities and strengthened industry partnerships.

He holds a master’s degree in computer software engineering from San Jose State University, CA, USA and has been recognized for his innovative contributions, including winning the Silicon Valley Innovation Challenge. He is passionate about mentoring and coaching emerging talent and thrives in environments where he can leverage his skills to solve complex problems and advance technological initiatives.
